Author: admin

The Limitations of Construct Validity and How to Address Them in Your PhD Research

01/03/2025

Construct validity is a cornerstone of rigorous academic research, especially in the social sciences, psychology, education, and other fields that rely on abstract theoretical concepts. As a PhD student, ensuring construct validity in your study is essential for producing credible, impactful findings. However, the concept of construct validity is not without limitations. Understanding these limitations and learning how to address them will help you design a robust research framework and contribute meaningful insights to your discipline.

Understanding Construct Validity

Construct validity refers to the extent to which a test, instrument, or experimental design measures the theoretical construct it is intended to measure. Constructs are abstract ideas or phenomena that cannot be directly observed, such as intelligence, motivation, or satisfaction. Researchers rely on operational definitions and measurable proxies to study these constructs.

For instance, if you are studying “academic motivation,” you might use a survey to assess students’ self-reported motivation levels. The survey’s construct validity depends on how well it captures the true nature of academic motivation.

Two key components of construct validity are commonly assessed:

- Convergent Validity: Demonstrates that the measure correlates strongly with other measures of the same or similar constructs.

- Discriminant Validity: Demonstrates that the measure does not correlate strongly with measures of distinct, theoretically unrelated constructs.
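As a rough illustration of the two components, the sketch below correlates a new motivation scale with two other measures. The first is an established measure of a similar construct. The second is a measure of a theoretically unrelated variable. All scores and variable names are hypothetical, and six respondents is far too few for a real study. The point is only the expected pattern: a high correlation for convergent evidence and a near-zero correlation for discriminant evidence.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# Hypothetical scores for six respondents (illustrative only).
new_motivation_scale = [12, 15, 9, 20, 17, 11]
established_motivation_scale = [31, 34, 27, 45, 38, 30]  # similar construct
commute_minutes = [20, 35, 25, 30, 15, 30]               # theoretically unrelated

r_convergent = pearson(new_motivation_scale, established_motivation_scale)
r_discriminant = pearson(new_motivation_scale, commute_minutes)
print(f"convergent r = {r_convergent:.2f}, discriminant r = {r_discriminant:.2f}")
```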

Limitations of Construct Validity

Construct validity is not infallible. Several limitations arise from its theoretical complexity, reliance on subjective interpretation, and practical challenges in operationalizing constructs. Below, we discuss these limitations in detail.

1. Abstract Nature of Constructs

Many constructs in academic research are inherently abstract and multifaceted. For example, constructs like “leadership” or “resilience” encompass multiple dimensions, such as behavioral, cognitive, and emotional components. As a researcher, you must distill these dimensions into measurable variables. However, this simplification risks omitting important nuances of the construct.

Implication for PhD Research:

Overlooking the complexity of constructs can lead to incomplete or biased interpretations. If your operational definitions do not capture the full scope of the construct, your findings may lack depth and generalizability.

2. Subjectivity in Operational Definitions

The process of defining and measuring constructs often relies on subjective judgment. Researchers choose indicators and tools based on their interpretations of the construct, which introduces potential biases. For instance, two researchers studying “job satisfaction” might select different survey items, leading to inconsistent results.

Implication for PhD Research:

Inconsistencies in operational definitions can hinder replication and comparability across studies. This poses a challenge for PhD students striving to build on existing research or establish a solid theoretical foundation.

3. Measurement Error

Measurement tools used to operationalize constructs are rarely perfect. Surveys, tests, and observational methods are prone to errors, including response bias, social desirability bias, and instrument limitations. These errors undermine the accuracy of construct measurement and threaten construct validity.

Implication for PhD Research:

Measurement error reduces the reliability and validity of your data, potentially leading to flawed conclusions. This is especially critical for PhD students, as doctoral research often forms the basis for future academic work.
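One concrete consequence of measurement error is attenuation. Random error in two measures shrinks the correlation observed between them. The simulation below is a minimal sketch under assumed values: a true correlation of 0.6 and a reliability of 0.7 for both measures.

It also applies Spearman's classic correction for attenuation. The correction divides the observed correlation by the square root of the product of the two reliabilities. Here the reliabilities are known by construction. In real data they must be estimated, so the corrected value is only as trustworthy as those estimates.

```python
import random
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def simulate_attenuation(true_r=0.6, reliability=0.7, n=20_000, seed=1):
    """Return (true, observed, corrected) correlations for simulated scores."""
    rng = random.Random(seed)
    # Error SD chosen so that var(true) / var(observed) equals the reliability.
    error_sd = sqrt(1 / reliability - 1)
    true_x = [rng.gauss(0, 1) for _ in range(n)]
    true_y = [true_r * t + rng.gauss(0, sqrt(1 - true_r ** 2)) for t in true_x]
    obs_x = [t + rng.gauss(0, error_sd) for t in true_x]
    obs_y = [t + rng.gauss(0, error_sd) for t in true_y]
    r_true = pearson(true_x, true_y)
    r_obs = pearson(obs_x, obs_y)
    # Spearman's correction: r_obs / sqrt(rel_x * rel_y).
    r_corrected = r_obs / sqrt(reliability * reliability)
    return r_true, r_obs, r_corrected

r_true, r_obs, r_corrected = simulate_attenuation()
print(f"true {r_true:.2f}, observed {r_obs:.2f}, corrected {r_corrected:.2f}")
```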

4. Context Dependency

The meaning and relevance of constructs often depend on cultural, social, and temporal contexts. For example, a construct like “leadership effectiveness” may vary significantly between organizational cultures in different countries or industries. Such contextual variability makes it difficult to develop universally valid measures.

Implication for PhD Research:

Ignoring context can result in overly narrow findings or limit the applicability of your research to diverse populations. PhD students must strike a balance between specificity and generalizability.

5. Difficulty in Demonstrating Causality

Construct validity alone does not establish causal relationships between constructs. For example, your study may show that “team cohesion” and “performance” are correlated. Without additional evidence, such as a research design that rules out alternative explanations, it cannot confirm that cohesion causes better performance. This limitation underscores the importance of attending to complementary validity types. Internal validity concerns whether causal inferences are warranted, and external validity concerns whether they generalize beyond the study.

Strategies to Address Construct Validity Limitations

While the limitations of construct validity are significant, they are not insurmountable. Here are practical strategies to address these challenges in your PhD research.

1. Thorough Literature Review

A comprehensive literature review is essential for understanding your construct and its existing operational definitions. By examining previous studies, you can identify best practices, refine your measurement approach, and avoid common pitfalls.

Implementation Tip:

Use meta-analyses and systematic reviews to identify validated instruments and methodologies. This will help you justify your choices and ensure consistency with existing research.

2. Multi-Method Approach

Triangulating data using multiple methods enhances construct validity. For example, combine self-report surveys with behavioral observations or physiological measures to capture different dimensions of the construct.

Implementation Tip:

Design a mixed-methods study that integrates qualitative and quantitative approaches. This can provide richer insights and mitigate the limitations of any single method.

3. Pilot Testing

Pilot testing your instruments is a crucial step to identify and address potential weaknesses. It allows you to evaluate the clarity, reliability, and validity of your measures before conducting the main study.

Implementation Tip:

Administer your instruments to a small sample similar to your target population. Use feedback to refine your operational definitions and address ambiguities.
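If your pilot instrument is a multi-item scale, one common reliability check is Cronbach's alpha:

alpha = k/(k - 1) * (1 - sum of item variances / variance of total scores)

The sketch below assumes complete responses and uses made-up pilot data. The often-quoted 0.70 threshold is a convention rather than a rule. Alpha is also only meaningful when the items are intended to measure a single dimension.

```python
import statistics

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # one tuple of scores per item
    item_var_sum = sum(statistics.variance(col) for col in items)
    total_var = statistics.variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical pilot data: 5 respondents x 4 Likert items.
pilot = [
    [4, 5, 4, 5],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [2, 3, 2, 2],
    [4, 4, 3, 4],
]
print(round(cronbach_alpha(pilot), 2))  # 0.93
```

A high alpha on a tiny pilot sample is encouraging but unstable. Re-estimate it on the main sample before reporting it.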

4. Establishing Convergent and Discriminant Validity

To strengthen construct validity, demonstrate two things. First, your measures should correlate appropriately with measures of related constructs (convergent validity). Second, they should be distinguishable from measures of distinct constructs (discriminant validity). Statistical techniques such as confirmatory factor analysis can provide this evidence.

Implementation Tip:

Report validity evidence in your thesis or publications to bolster the credibility of your research. Discuss how your measures align with theoretical expectations.
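After a confirmatory factor analysis, two widely reported pieces of validity evidence are the average variance extracted (AVE) and the Fornell-Larcker criterion. AVE is the mean of the squared standardized loadings. The criterion requires each construct's square root of AVE to exceed its correlations with the other constructs.

The sketch below assumes you already have standardized loadings and latent correlations from your CFA software. The construct names, loadings, and correlation are hypothetical. Some methodologists now prefer alternatives such as the heterotrait-monotrait (HTMT) ratio.

```python
from math import sqrt

def ave(loadings):
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)

def fornell_larcker(loadings_by_construct, correlations):
    """Map each construct to True if sqrt(AVE) exceeds every absolute
    correlation between that construct and any other construct."""
    results = {}
    for name, loadings in loadings_by_construct.items():
        root_ave = sqrt(ave(loadings))
        others = [abs(r) for pair, r in correlations.items() if name in pair]
        results[name] = all(root_ave > r for r in others)
    return results

# Hypothetical CFA output: standardized loadings and one latent correlation.
loadings = {
    "motivation": [0.78, 0.81, 0.74, 0.70],
    "engagement": [0.72, 0.69, 0.80],
}
latent_correlations = {("motivation", "engagement"): 0.55}

for construct, items in loadings.items():
    print(construct, "AVE =", round(ave(items), 3))
print(fornell_larcker(loadings, latent_correlations))
```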

5. Account for Context

Contextualize your constructs and measures by considering cultural, social, and temporal factors. This ensures that your research findings are relevant and applicable to the populations you study.

Implementation Tip:

If your study involves diverse populations, adapt your instruments to reflect cultural norms and values. Conduct cross-cultural validation to confirm their suitability.

6. Collaborate with Experts

Consulting domain experts can provide valuable insights into your construct and measurement approach. Experts can help you refine your definitions, select appropriate instruments, and interpret results.

Implementation Tip:

Engage in interdisciplinary collaboration to incorporate diverse perspectives and expertise. This can enrich your theoretical framework and methodological rigor.

Applying These Strategies in Your PhD Research

Addressing the limitations of construct validity requires a proactive and iterative approach. Start by clearly defining your constructs and aligning them with your research objectives. Use validated instruments whenever possible, and justify your methodological choices in your dissertation.

Remember that construct validity is not an isolated concept. It intersects with other validity types, such as content, criterion-related, and internal validity. As a PhD student, your goal should be to design a study that integrates these validity types to produce robust, reliable, and impactful research findings.

By acknowledging the limitations of construct validity and implementing strategies to address them, you can enhance the quality of your doctoral research and make a meaningful contribution to your field.

Construct validity is a critical but complex aspect of academic research. Its limitations—ranging from the abstract nature of constructs to measurement error—pose significant challenges, particularly for PhD students navigating the research process. However, these challenges also present opportunities for innovation and methodological rigor. By conducting thorough literature reviews, using multi-method approaches, pilot testing instruments, and contextualizing your constructs, you can mitigate these limitations and strengthen your research design.

The C.A.R.S. Model for Framing and Revising Your Introduction Section

28/05/2024

Well begun is half done, and the same applies to research papers. A poorly drafted introduction can hurt how reviewers respond to a paper during peer review. Poor introductions tend to be overly broad and vague, and they lack context and background. Unlike well-crafted introductions, they may ignore the research gap and focus on the author’s own work rather than on the field. Also avoid excessive jargon and unsupported statements, which make the section long, repetitive, and vague.

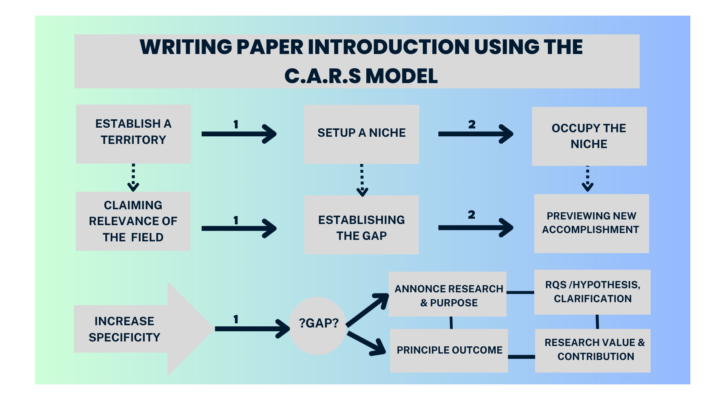

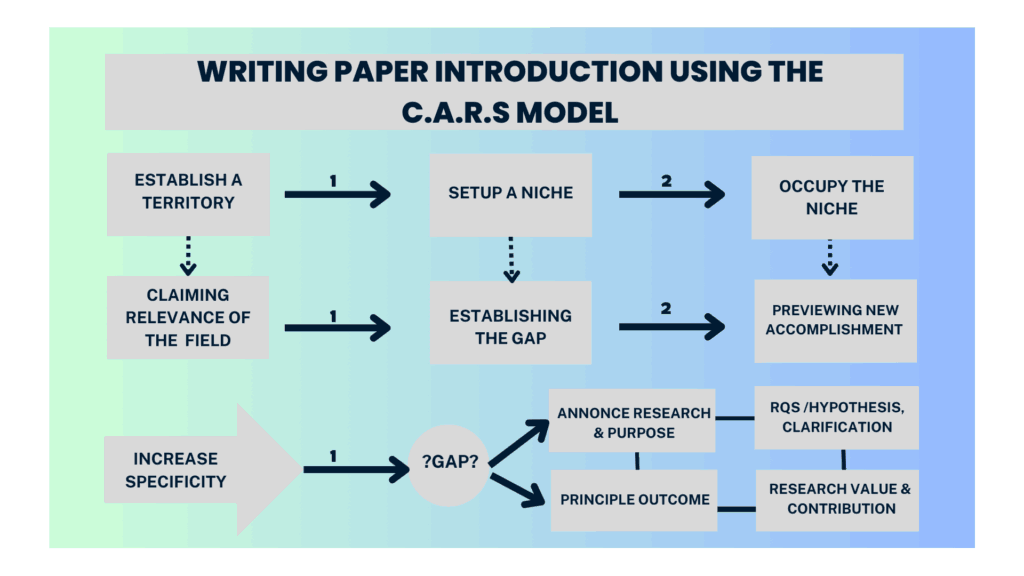

Now the question arises: how do you design and draft a good introduction? Let us discuss the Create a Research Space (CARS) framework developed by John Swales, beginning with a flowchart of the CARS model’s workflow.

The Workflow for the CARS model.

When writing a research paper, the introduction is a critical component. It sets the tone for the entire paper and holds the reader’s attention by establishing the importance of the work and sparking curiosity. It also gives an overview of both the paper’s purpose and its key findings.

As is evident from the flowchart above, the model comprises three moves:

Establish a Territory

Set up a Niche

Occupy the Niche

By working through these three moves in sequence, you cover three important aspects of the introduction:

Research Question

Context

Persuading the audience

a) Research question: The research question is the foundation of the study, so invest time in formulating a relevant one. It determines what information you need to find, the scope of the study, and its data requirements. Hence, the research question should be focused, succinct, and practical.

b) Context: Context builds comprehension and helps readers understand and follow your message. Before diving into the details, give the background of the study so that readers can grasp the relevance of your message. Tailor the context to your readers’ level of knowledge and needs.

c) Persuade your audience: The best way to convince your audience is to gain credibility and win the reader’s trust. You can do this by demonstrating your experience, qualifications, and understanding of the subject matter, and by addressing what your audience needs. As a writer, also consider the emotional perspective and motivate readers by connecting with their emotions. Descriptions, anecdotes, and sometimes emotional language can help here. Remember, however, that research is based on facts, and in research writing trust is built on statistics, expert opinion, and real examples.

Python for Simulations and Modeling in PhD Research: Advantages and Applications

23/05/2023

Simulations and modeling are essential tools in PhD research, enabling researchers to investigate complex phenomena, predict outcomes, and gain valuable insights. Python has emerged as a preferred language for conducting simulations and modeling in diverse fields of study. Its versatility, extensive libraries, and ease of use have made it a powerful tool for researchers seeking to simulate and model intricate systems. This blog will explore the advantages and applications of using Python for simulations and modeling in PhD research.

Furthermore, Python implementation service providers can help researchers streamline their projects. They provide expertise in Python programming and help ensure that simulations and models are implemented efficiently and deliver accurate results.

Application

Examples of Python for Simulations and Modeling in PhD Research:

Physics and Engineering: Python is widely used in simulating physical systems, such as quantum mechanics, fluid dynamics, and electromagnetism. Researchers can employ Python libraries like SciPy, NumPy, and SymPy to solve differential equations, perform numerical simulations, and analyze experimental data. For example, Python can be utilised to simulate the behavior of particles in particle physics experiments or model the flow of fluids in engineering applications.
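To give a flavor of such a simulation, the sketch below integrates a damped harmonic oscillator, x'' + 2*gamma*x' + omega^2*x = 0, and checks the result against the closed-form solution. It uses a hand-written fourth-order Runge-Kutta (RK4) step so that it runs with the standard library alone. In practice you would more likely call SciPy's `scipy.integrate.solve_ivp`. The parameter values are arbitrary.

```python
import math

def rk4_step(f, t, y, dt):
    """Advance the state y (a list) by one classical Runge-Kutta 4 step."""
    k1 = f(t, y)
    k2 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k1)])
    k3 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k2)])
    k4 = f(t + dt, [yi + dt * ki for yi, ki in zip(y, k3)])
    return [yi + dt / 6 * (a + 2 * b + 2 * c + d)
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]

def damped_oscillator(omega, gamma):
    """x'' + 2*gamma*x' + omega**2 * x = 0 rewritten as a first-order system."""
    def f(t, y):
        x, v = y
        return [v, -2 * gamma * v - omega ** 2 * x]
    return f

def simulate(f, y0, t_end, dt):
    """Fixed-step RK4 integration; returns a list of (t, [x, v]) pairs."""
    t, y = 0.0, list(y0)
    trajectory = [(t, y)]
    for _ in range(int(round(t_end / dt))):
        y = rk4_step(f, t, y, dt)
        t += dt
        trajectory.append((t, y))
    return trajectory

def exact_position(t, omega, gamma):
    """Closed-form x(t) for the underdamped case with x(0)=1, v(0)=0."""
    wd = math.sqrt(omega ** 2 - gamma ** 2)
    return math.exp(-gamma * t) * (math.cos(wd * t) + gamma / wd * math.sin(wd * t))

OMEGA, GAMMA = 2.0, 0.1  # hypothetical parameters
trajectory = simulate(damped_oscillator(OMEGA, GAMMA), [1.0, 0.0], t_end=20.0, dt=0.01)
t_final, (x_final, v_final) = trajectory[-1]
print(f"x({t_final:.1f}): numerical {x_final:.6f}, exact {exact_position(t_final, OMEGA, GAMMA):.6f}")
```

Comparing against a known analytical case like this is also a simple form of code verification.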

Biology and Computational Biology: Python finds extensive applications in modeling biological systems and conducting computational biology research. Researchers can leverage Python libraries like Biopython and NetworkX to simulate biological processes, analyze genetic data, construct gene regulatory networks, and analyze protein structures. Python’s flexibility and ease of integration with other scientific libraries make it well suited to complex biological simulations.
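Gene regulatory networks are often explored with simple Boolean models. In these models each gene is either on (1) or off (0), and its next state is a logical function of its regulators. The sketch below uses a hypothetical three-gene repressilator, in which each gene represses the next in a ring. It applies synchronous updates until the sequence of states repeats, which reveals the network's attractor.

The model is written in plain Python for self-containment. A library such as NetworkX could store the regulatory graph itself, but none of its API is used here.

```python
# Hypothetical three-gene repressilator: each gene is switched off by its repressor.
RULES = {
    "A": lambda s: not s["C"],  # C represses A
    "B": lambda s: not s["A"],  # A represses B
    "C": lambda s: not s["B"],  # B represses C
}

def step(state):
    """Synchronous update: every gene is recomputed from the previous state."""
    return {gene: int(rule(state)) for gene, rule in RULES.items()}

def find_attractor(state, max_steps=100):
    """Iterate until a state repeats; return the repeating cycle of states."""
    seen = []
    for _ in range(max_steps):
        key = tuple(sorted(state.items()))
        if key in seen:
            return seen[seen.index(key):]
        seen.append(key)
        state = step(state)
    raise RuntimeError("no attractor found within max_steps")

cycle = find_attractor({"A": 1, "B": 0, "C": 0})
print(len(cycle))  # 6: the network oscillates rather than settling
```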

Prior research

Title: “DeepFace: Closing the Gap to Human-Level Performance in Face Verification”

Summary: This research paper introduced DeepFace, a deep-learning framework for face verification. Python was used to implement the neural network models and train them on large-scale datasets.

Title: “TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems”

Summary: This paper presented TensorFlow, a popular open-source machine learning framework. Python was used as the primary programming language for building and deploying machine learning models using TensorFlow.

Title: “PyTorch: An Imperative Style, High-Performance Deep Learning Library”

Summary: This research introduced PyTorch, another widely used deep learning library. PyTorch provides a Python-first, imperative interface for building and training deep neural networks, with its performance-critical core implemented in C++.

Title: “Natural Language Processing with Python”

Summary: This book focuses on natural language processing (NLP) techniques using Python. It covers NLP tasks such as tokenization, part-of-speech tagging, and text classification (including sentiment-style classification of reviews), implemented with the NLTK library.

Title: “NetworkX: High-Productivity Software for Complex Networks”

Summary: This research paper introduced NetworkX, a Python library for studying complex networks. It provided tools for creating, manipulating, and analyzing network structures, enabling researchers to explore network science and graph theory.

Title: “SciPy: Open Source Scientific Tools for Python”

Summary: This paper described SciPy, a scientific computing library for Python. It covered a wide range of topics, including numerical integration, optimization, signal processing, and linear algebra, all implemented in Python.

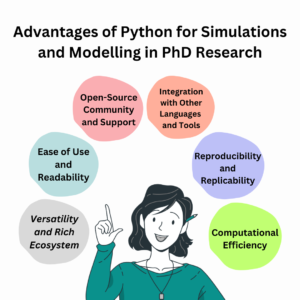

Advantages

Python offers several advantages for simulations and modeling in PhD research. Here are some key advantages:

Versatility and Rich Ecosystem: Python is a highly versatile programming language with a vast ecosystem of libraries and frameworks specifically designed for scientific computing, simulations, and modeling. Libraries such as NumPy, SciPy, Pandas, and Matplotlib provide robust tools for numerical computations, optimization, data manipulation, and visualization, making Python a powerful choice for PhD research.

Ease of Use and Readability: Python has a clean and readable syntax, making code easier to write, understand, and maintain. Its simplicity and high-level nature allow researchers to focus on the problem at hand rather than on complex programming details. Python’s readability also enhances collaboration, because others can more easily review and understand the code, which facilitates reproducibility and the sharing of research findings.

Open-Source Community and Support: Python has a large and active open-source community, which means that researchers have access to a wide range of resources, forums, and documentation. This community actively develops and maintains numerous scientific libraries and packages, providing continuous improvements, bug fixes, and new features. It also enables researchers to seek help, share code, and collaborate with experts in their respective domains.

Integration with Other Languages and Tools: Python’s flexibility extends to its ability to integrate with other languages and tools. Researchers can easily combine Python with lower-level languages like C and Fortran to leverage their performance benefits. Additionally, Python can be seamlessly integrated with popular simulation and modeling software, such as MATLAB, allowing researchers to leverage existing tools and codes while benefiting from Python’s versatility.

Reproducibility and Replicability: Python’s emphasis on code readability and documentation contributes to the reproducibility and replicability of research. By using Python for simulations and modeling, researchers can write clean, well-documented code that others can easily understand and reproduce. This fosters transparency in research and allows for independent verification and validation of results.

Computational Efficiency: Although Python is an interpreted language, it offers several tools and techniques to optimize performance. Libraries like NumPy and SciPy delegate heavy numerical work to compiled code with efficient algorithms and data structures. Numba provides just-in-time (JIT) compilation, and Cython compiles Python-like code to C ahead of time; both can speed up computationally intensive parts of the code. Additionally, Python provides interfaces to multi-core processors and distributed computing frameworks, allowing researchers to scale their simulations and models to large systems.

Verification, validation, and uncertainty quantification of Python-based simulations and modelling

Verifying, validating, and quantifying uncertainties in Python-based simulations and modelling for PhD research involves a comprehensive process to ensure the reliability, accuracy, and credibility of your simulations and models. Here is a general framework to guide you through the steps:

Define verification, validation, and uncertainty quantification (VVUQ) objectives: Clearly articulate the specific objectives you want to achieve in terms of verification, validation, and uncertainty quantification. Determine the metrics and criteria you will use to assess the performance and reliability of your simulations and models.

Develop a verification plan: Establish a plan for verifying the correctness and accuracy of your Python-based simulations and models. This may involve activities such as code inspection, unit testing, and comparison against analytical solutions or benchmark cases. Define the specific tests and criteria you will use to verify different aspects of your simulations and models.

Conduct code verification: Perform code verification to ensure that your Python code accurately implements the mathematical and computational algorithms of your simulations and models. This can involve using analytical solutions or simplifying cases with known results to compare against the output of your code.
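A common code-verification test is to check that the observed order of accuracy matches the theoretical one. You run the solver at successively smaller time steps and compare each result with an analytical solution. The sketch below applies this to forward Euler on dy/dt = -k*y, whose exact solution is y0*exp(-k*t), with arbitrarily chosen parameters. Forward Euler is first order, so halving the time step should roughly halve the error and give an observed order close to 1.

```python
import math

def euler_decay(k, y0, t_end, dt):
    """Forward Euler solution of dy/dt = -k*y, evaluated at t_end."""
    y = y0
    for _ in range(int(round(t_end / dt))):
        y += dt * (-k * y)
    return y

def observed_order(k=1.0, y0=1.0, t_end=1.0, dts=(0.1, 0.05, 0.025)):
    """Observed convergence order between successive step sizes."""
    exact = y0 * math.exp(-k * t_end)  # analytical reference solution
    errors = [abs(euler_decay(k, y0, t_end, dt) - exact) for dt in dts]
    # For a method of order p, error ~ C * dt**p, so p = log(e1/e2) / log(dt1/dt2).
    return [math.log(errors[i] / errors[i + 1]) / math.log(dts[i] / dts[i + 1])
            for i in range(len(dts) - 1)]

print(observed_order())
```

If the observed order drifts well away from the expected value, that usually points to an implementation bug rather than a modeling issue.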

Plan the validation process: Develop a validation plan to assess the accuracy and reliability of your simulations and models by comparing their results against experimental data, empirical observations, or field measurements. Define the validation metrics, experimental setup, and data requirements for the validation process.

Gather validation data: Collect or obtain relevant experimental data or observations that can be used for the validation of your simulations and models. Ensure that the validation data covers the same phenomena or scenarios as your simulations and models and is representative of real-world conditions.

Perform model validation: Run your Python-based simulations and models using the validation data and compare the results with the corresponding experimental data. Assess the agreement between the simulation results and the validation data using appropriate statistical measures and visualization techniques. Analyze and interpret any discrepancies or deviations and identify potential sources of error or uncertainty.
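As a minimal sketch of the statistical comparison step, the function below computes three common agreement measures between paired model outputs and measurements: mean bias, root-mean-square error (RMSE), and maximum absolute error. The data are hypothetical, and the metrics appropriate for your field may differ.

```python
import math

def validation_metrics(simulated, observed):
    """Mean bias, RMSE and max absolute error for paired model outputs and data."""
    if len(simulated) != len(observed):
        raise ValueError("simulated and observed must have the same length")
    residuals = [s - o for s, o in zip(simulated, observed)]
    n = len(residuals)
    return {
        "bias": sum(residuals) / n,
        "rmse": math.sqrt(sum(r * r for r in residuals) / n),
        "max_abs_error": max(abs(r) for r in residuals),
    }

# Hypothetical paired simulation outputs and measurements.
metrics = validation_metrics([1.0, 2.0, 3.0], [1.5, 2.0, 2.5])
print(metrics)
```

In this toy case the mean bias is zero while the RMSE is not. This is why reporting a single summary statistic can hide systematic discrepancies.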

Quantify uncertainties: Identify the sources of uncertainties in your simulations and models, such as input parameter variability, measurement errors, or modeling assumptions. Implement techniques for uncertainty quantification, such as Monte Carlo simulations, sensitivity analysis, or Bayesian inference, to characterize and propagate uncertainties through your simulations and models.
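The Monte Carlo approach mentioned above can be sketched in a few lines. You sample the uncertain inputs from assumed distributions, run the model for each sample, and summarize the spread of the outputs. Here the model is a hypothetical exponential decay, y = y0*exp(-k*t). The input distributions (a normal rate constant and a uniform initial value) are illustrative assumptions, not recommendations.

```python
import math
import random
import statistics

def model(k, y0, t=1.0):
    """Hypothetical model under study: exponential decay y(t) = y0 * exp(-k*t)."""
    return y0 * math.exp(-k * t)

def monte_carlo_uq(n=10_000, seed=42):
    """Propagate assumed input uncertainty; return mean, SD and a 95% interval."""
    rng = random.Random(seed)
    outputs = []
    for _ in range(n):
        k = rng.gauss(0.5, 0.05)     # assumed uncertainty in the rate constant
        y0 = rng.uniform(0.9, 1.1)   # assumed uncertainty in the initial value
        outputs.append(model(k, y0))
    outputs.sort()
    lower = outputs[int(0.025 * n)]
    upper = outputs[int(0.975 * n) - 1]
    return statistics.mean(outputs), statistics.stdev(outputs), (lower, upper)

mean, sd, (lower, upper) = monte_carlo_uq()
print(f"mean {mean:.4f}, sd {sd:.4f}, 95% interval [{lower:.4f}, {upper:.4f}]")
```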

Validate uncertainty quantification: Validate the uncertainty quantification process by comparing the estimated uncertainties against independent measurements or other sources of information. Assess the reliability and accuracy of your uncertainty estimates and refine them if necessary.
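One simple way to check uncertainty estimates against independent measurements is empirical coverage. This is the fraction of measurements that fall inside their predicted intervals, which you compare with the nominal level (for example, 95%). The intervals and measurements below are hypothetical. With only four points the coverage estimate is very noisy, so a real check needs many more.

```python
def empirical_coverage(intervals, measurements):
    """Fraction of independent measurements lying inside their predicted intervals."""
    if len(intervals) != len(measurements):
        raise ValueError("need one interval per measurement")
    hits = sum(lo <= m <= hi for (lo, hi), m in zip(intervals, measurements))
    return hits / len(measurements)

# Hypothetical 95% prediction intervals and independent measurements.
intervals = [(0.50, 0.70), (0.40, 0.62), (0.55, 0.75), (0.45, 0.66)]
measurements = [0.61, 0.65, 0.58, 0.52]
coverage = empirical_coverage(intervals, measurements)
print(coverage)  # 0.75
```

Coverage far below the nominal level suggests the uncertainties are underestimated. Coverage far above it suggests they are overly conservative.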

Document and report: Document your verification, validation, and uncertainty quantification processes, methodologies, and findings. Clearly describe the steps taken, the results obtained, and the conclusions drawn from the VVUQ analysis. Communicate your findings through research papers, reports, or presentations to demonstrate the credibility and robustness of your Python-based simulations and models.

Continuously update and improve: VVUQ is an iterative process, and as new data, insights, or techniques become available, it is important to continuously update and improve your simulations and models. Incorporate feedback, learn from validation exercises, and refine your simulations and models to enhance their accuracy, reliability, and usefulness for your PhD research.

Why Is Python Good For Research? Benefits of the Programming Language

Python is widely recognized as a popular programming language for research across various fields. Here are several reasons why Python is considered beneficial for research:

Ease of use and readability: Python has a clean and intuitive syntax, making it easy to learn and use, even for individuals with minimal programming experience. Its readability and simplicity enable researchers to quickly prototype ideas, experiment with algorithms, and focus on the research problem rather than struggling with complex programming concepts.

Extensive scientific libraries: Python offers a rich ecosystem of scientific libraries and frameworks that cater specifically to research needs. Libraries such as NumPy, SciPy, pandas, and scikit-learn provide powerful tools for numerical computing, data analysis, machine learning, and statistical modeling. These libraries streamline research tasks, eliminate the need to reinvent the wheel and enable researchers to leverage existing optimized functions and algorithms.

In conclusion, Python has proven to be a valuable asset in simulations and modeling for PhD research, offering a wide range of advantages and applications. Its ease of use, extensive scientific libraries, interoperability, and supportive community make it an excellent choice for researchers seeking to develop accurate and efficient simulations. Python’s versatility allows for seamless integration with other languages and tools, further enhancing its capabilities in interdisciplinary research. Moreover, the availability of Python implementation service providers can significantly support researchers, providing expertise and assistance in implementing simulations and models. With Python’s robust ecosystem and collaborative nature, researchers can explore new frontiers, tackle complex problems, and contribute to advancements in their respective fields through simulations and modeling.

If you want our help in this regard, then you can visit our website https://www.fivevidya.com/python-projects.php to learn more.

Thank you for reading this blog.

The Challenges in Writing a Multi-Authored Academic Publication: More Co-Authors for Your Research Paper

03/04/2023

Co-authorship is when two or more authors collaborate to publish a research article. Multidisciplinary research has increased significantly in the past few years, and multi-authored academic research has expanded with it. There are several reasons for this growth. Most commonly, researchers attribute it to increased pressure to publish for career growth and promotion, and under such pressure co-authorship often offers a lucrative return on investment. Collaborative writing has also increased because wider research networks create more opportunities for co-authorship, and because technological advances have made it convenient to work on the same text electronically.

Multiple authorship can benefit authors in various ways, but it comes with its own set of challenges and conflicts. The issues that can flare up in multi-authored research need to be addressed in advance so that authors are forewarned and disputes can be averted.

A few questions arise as soon as we talk about co-authorship in research. Among the first are:

a) What should be the order of names on the paper? Should names be listed by contribution, by seniority, alphabetically, or by some other logic that decides who takes the first position?

b) How can an academic colleague’s contribution be correctly justified to grant them authorship credit? The question becomes more pressing for a PhD supervisor or research grant holder whose name is sometimes added merely by virtue of their position.

These questions cannot be ignored. Authorship credit is a crucial criterion for professional growth and securing funding, and it is also an important concern in publishing ethics.

Challenges associated with Co-Authorship

Paucity of appropriate training and guidance

Most researchers receive no training in co-authorship, and they also feel that their institutions offer no special support for collaborative writing.

Confusion and Dissatisfaction:

Many researchers lack clarity about the practice of co-authorship and its implications. Moreover, the growing prevalence of co-authorship has made senior professors and project guides more likely to get their names attached when a paper comes to them for review. Senior academics are often over-credited, while junior scholars do not get their due for the effort they put in. This leads to a general sense of dissatisfaction and confusion about co-authorship among budding researchers.

Lack of Co-Authorship Policies:

Institutions lack structured co-authorship policies and ethics guidelines that could help researchers overcome the issues they face in this regard. Institutions need to create a framework of co-authorship policies and disseminate it across the research community so that best practices in the field are encouraged.

Acceptance of Diverse Work Styles:

Not all researchers share the same work style, and when there are three or more authors, clashes in work styles are inevitable. Getting along with other researchers’ working styles requires considerable adjustment. Most of the time, this adjustment is expected from junior researchers, while senior professors and research guides show little flexibility toward budding researchers.

Miscommunication amongst the authors

The English saying “Too many cooks spoil the broth” applies well to co-authored research. Clashes and miscommunication over various factors are bound to create friction among the authors. All details and the overall approach should be decided well in advance, before writing begins. This can minimize the complications that arise from miscommunication, but there is always scope for friction in some form or other.

Inappropriate writing tools or approaches:

Not all authors who write collaboratively have the same command of research tools, and they may not share the same approach to writing. This can disrupt the uniformity of the paper in both quality and style. It affects the writing quality and often frustrates authors who feel that another author’s contribution is hurting the outcome of the research.

10 Commandments of Co-Authorship

A multi-authored paper can be the outcome of a single, discrete research project. At times it is part of a much larger project that produces several papers from common data or methods. Either way, it calls for meticulous planning and collaboration among team members. The commandments of co-authorship presented here apply at both the planning and the writing stages of the paper. Authors can return to these rules from time to time, and they can lay the foundation for constructive research.

Commandment 1:

Contemplate well in choosing your co-authors

The writing team is formed at the earliest stage of writing the paper. Co-authors should be chosen for their expertise and research interests. Understand the expectations of all contributors, set a research goal, and make sure the researchers are willing to work collectively toward that common goal.

This step should be led by the project leader, who needs to identify the varied expertise required for the paper and define the criteria for qualifying as a co-author. When there is doubt about selecting a co-author, an inclusive approach usually works best. The roles and responsibilities of the different authors can also be broadly defined at this stage.

Commandment 2:

Proactive Leadership Strategy

A proactive leader is critical for a multi-authored paper to reach a satisfactory outcome. The leader is usually the first author. They should build consensus rather than take a hierarchical approach, while working on the manuscript structure and keeping the overall vision of the paper alive.

Commandment 3:

An Effective Data Management Plan (DMP)

A DMP should be created and circulated at the start of the paper and approved by all co-authors. It is a broad outline of how the data will be shared, versioned, stored, and curated. It also specifies which authors will have access to the data at different stages of the research project. The DMP should be simple but detailed, and short enough to summarize in a couple of paragraphs. All data providers and co-authors should agree to the DMP, confirming that it meets the requirements of both the institution and the funding agency.

Commandment 4:

Clearly define the authorship prerequisites and guidelines

Transparent authorship guidelines help to avert confusion and misunderstanding at all stages of the research. The author order can be based on the amount each author contributed, with the person who contributed the most listed first. Where all authors have contributed equally, alphabetical order can be used. At times, some contributors will not meet the predefined criteria for authorship; they can instead be credited in the acknowledgements. The author order should be revisited and reconsidered during the project as roles and responsibilities change.

Commandment 5:

Give a framework for the writing strategy

The writing strategy you choose for your co-authored article should suit the needs of the team. There can be one principal writer for the paper if the data is wide-ranging in nature. The most commonly used strategy is to split the paper into separate subsections, with different authors responsible for sections based on their individual expertise and interests. Whatever the strategy, it should include all co-authors, engaging them from the narrative stage through to the final structuring of the paper. This helps greatly if any part of the paper needs to be rewritten at a later stage.

Commandment 6:

Choosing Appropriate Digital Tools

If you choose to write your paper interactively, with the whole group writing together, you may need a platform that supports synchronous work, such as Google Docs. On the other hand, for papers that are written sequentially, or where the principal author does most of the writing, conventional software such as Microsoft Word may work. At the preliminary stage itself, plan how comments and tracked changes will be handled. In addition to the writing tool, you will have to choose a platform for regular virtual meetings. Compare platforms against the specific requirements of your research meetings, such as the number of participants allowed in a single group call, permission to record and save meetings, screen sharing, or note-taking rights. With the many options available today for virtual interaction, suitable customised options can be found cost-effectively, which makes the choice easier.

Commandment 7:

Define clear, realistic and rigid timelines

Deadlines help to maintain the group's momentum and help ensure that the paper is completed on time. As a co-author, look at the deadlines set by the group leader from your own perspective and check whether, given all your other commitments, you will be able to meet them. Respect other authors' time as well by being punctual for meetings and keeping to schedules. Developing a culture of positivity and encouragement in the team makes deadlines easier to keep and helps everyone stay on task. Always remember that collaborative writing needs more revisions and reading sessions than a solo research paper.

Commandment 8:

Communicate effectively with all co-authors

Being transparent and explicit about expectations and deadlines helps to avert misunderstandings among the authors and keeps conflicts at bay. If you are the project leader, make the consequences clear to all authors, for example of missing deadlines or breaking the group's rules. Consequences could range from a change in author order to removal of authorship. A co-author who does not work well in the team is also unlikely to be included in the same team in future research.

Commandment 9:

Collaborate with authors from diverse background and design participatory group model

When a research paper has multiple authors, they may come from different cultural or demographic backgrounds. This brings a wider perspective and inputs from different backgrounds to the research. There may be language barriers, which can be overcome by taking a more empathetic approach towards authors who are non-native speakers so that they can express their opinions. A well-curated participatory group model can be used to create opportunities for the different personalities among the authors to express themselves.

Commandment 10:

Own the responsibility of co authorship

There are benefits to being a co-author, but it also comes with responsibilities. You have to take responsibility for the paper as a whole and confirm that you have checked its accuracy. One of the final steps before submitting a co-authored paper is to make sure that all authors have agreed on their contributions, approved the final version of the paper, and support its submission for publication.

Finally…

Despite the many challenges and obstacles in writing multi-authored papers discussed above, co-authored papers are the trend and they come with their own undeniable benefits. There is no rule of thumb for handling the challenges, but the commandments above can be read, reread and customised to the requirements and vision of your team. If you have never attempted a co-authored paper, the time is now. Go with the trend in research.

The making of the meshing model using ANSYS

09/01/2023

Suppose you want to build a house. Before building it, you need a blueprint so that it turns out just the way you want. A paper blueprint can be hard to understand fully, but a 3D model lets you see every corner of the house and change it as you like. ANSYS does something similar for engineering designs: it lets you build a virtual model of a product and study how it behaves before it is made. In this blog, we will go through the steps to make a meshing model using ANSYS. But first, let us look briefly at ANSYS and at meshing, so that the later part of the blog is easier to follow.

ANSYS is a simulation software that is used to solve a wide range of engineering problems across multiple disciplines, including structural, fluid dynamics, electromagnetic, and thermal analysis. The software can simulate the behaviour of a system under various conditions and can be used to optimise the design of a product or process.

ANSYS provides a wide range of capabilities, such as Finite Element Analysis (FEA), Computational Fluid Dynamics (CFD), and multiphysics simulation. FEA is used to analyse the structural integrity of a design and can predict how a product will respond to stress, vibration, and other loads. CFD is used to simulate the behaviour of fluids and gases and can predict things like flow patterns, heat transfer, and chemical reactions. Multiphysics simulation allows different physics to be coupled in the same simulation, such as thermal-structural or electromagnetic-structural analysis.

ANSYS can be used in a variety of industries, including aerospace and defence, automotive, electronics, energy, and healthcare. It is widely used by engineers, researchers, and scientists to design, analyse and optimise products, processes and equipment. ANSYS also provides a wide range of pre-processing and post-processing tools that can be used to prepare models, visualise results, and extract data. It also offers many add-ons and specialised software for specific industries and applications.

Identifying the meshing model

Meshing is the process of dividing a model into smaller, simpler shapes called “elements” that can be analysed numerically. This process is also known as “discretization.” The resulting mesh is a collection of interconnected elements that represent the model’s geometry and topology.

In simulation software such as ANSYS, the meshing process is critical to the accuracy and quality of the simulation results. A good mesh helps ensure that the solution is accurate, stable, and efficient, while a poor mesh can lead to errors, instability, and slow convergence.

There are different types of meshing techniques, such as structured, unstructured, and hybrid meshes. Structured meshes are composed of regular elements, such as quadrilaterals in 2D or hexahedra in 3D, arranged in an orderly grid, and are often used in CFD analysis. Unstructured meshes are composed of irregularly arranged elements, such as triangles or tetrahedra, and are often used in FEA. Hybrid meshes combine structured and unstructured elements and can be used in multiphysics simulations.
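To illustrate what a structured mesh is, the sketch below divides a rectangle into a regular grid of quadrilateral elements and records, for each element, the indices of its four corner nodes (its connectivity). It is plain Python written for illustration, not ANSYS code; the function name and dimensions are hypothetical.

```python
def structured_quad_mesh(width, height, nx, ny):
    """Divide a width-by-height rectangle into nx * ny quadrilateral elements.

    Returns (nodes, elements): nodes is a list of (x, y) coordinates and
    elements is a list of 4-tuples of node indices, ordered counter-clockwise.
    """
    dx, dy = width / nx, height / ny
    # Nodes lie on a regular (nx + 1) by (ny + 1) grid, numbered row by row.
    nodes = [(i * dx, j * dy) for j in range(ny + 1) for i in range(nx + 1)]
    elements = []
    for j in range(ny):
        for i in range(nx):
            n0 = j * (nx + 1) + i  # bottom-left node of this cell
            # bottom-left, bottom-right, top-right, top-left
            elements.append((n0, n0 + 1, n0 + nx + 2, n0 + nx + 1))
    return nodes, elements


nodes, elements = structured_quad_mesh(2.0, 1.0, nx=4, ny=2)
print(len(nodes), "nodes,", len(elements), "elements")
print("first element connectivity:", elements[0])
```

A 2 by 1 rectangle split into 4 by 2 cells gives 15 nodes and 8 elements. Because every node's neighbours follow from its grid position, structured meshes are compact to store, which is one reason they are attractive when the geometry allows them.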

The choice of mesh type and element size depends on the simulation type, the complexity of the geometry, and the accuracy required. Meshing can be done automatically or manually, depending on the simulation software and the user’s preference. Many packages, including ANSYS, offer both options.
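Why element size matters can be seen in a one-dimensional analogy. The sketch below, which is not an ANSYS procedure, replaces a function by straight-line elements (the composite trapezoidal rule) and integrates sin(x) from 0 to π, whose exact value is 2. The function name is hypothetical.

```python
import math


def integrate_linear_elements(f, a, b, n_elements):
    """Integrate f over [a, b] using n equal linear elements.

    On each element f is replaced by a straight line between its end nodes
    (the composite trapezoidal rule), a 1D stand-in for a coarse vs. fine mesh.
    """
    h = (b - a) / n_elements
    nodes = [a + k * h for k in range(n_elements + 1)]
    return sum(h * (f(x0) + f(x1)) / 2 for x0, x1 in zip(nodes, nodes[1:]))


exact = 2.0  # integral of sin(x) from 0 to pi
for n in (4, 8, 16, 32):
    approx = integrate_linear_elements(math.sin, 0.0, math.pi, n)
    print(f"{n:3d} elements  error = {abs(approx - exact):.5f}")
```

Each halving of the element size cuts the error by roughly a factor of four, at the cost of twice as many elements. In a real simulation the same trade-off between accuracy and computational cost guides the choice of element size.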

Making the meshing model using ANSYS

Creating a meshing model in ANSYS involves several steps, which can be broadly grouped into three categories: geometry preparation, meshing, and post-processing.

Geometry Preparation: This step involves importing or creating the model geometry in ANSYS. The geometry can be imported from a variety of file formats, such as IGES, STEP, or SolidWorks files. Once the geometry is imported, it should be cleaned up and prepared for meshing. This can include removing unnecessary details, exploiting symmetry to reduce the size of the model, or identifying the regions where boundary conditions will later be applied.

Meshing: Once the geometry is prepared, the next step is to create the mesh. ANSYS provides several meshing tools, including the ANSYS Meshing application, which can be used to create structured, unstructured, or hybrid meshes. The user can select the type of elements, element size, and other meshing parameters depending on the simulation type and the accuracy required.

Post-processing: After meshing, the user can use ANSYS’s post-processing tools to check the quality of the mesh, and make any necessary adjustments. This can include tasks such as checking for element quality, checking for element distortion, and checking for element skewness.
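Skewness measures how far an element's shape departs from the ideal. A widely used definition, the normalized equiangular skewness, compares an element's largest and smallest angles with the ideal angle (60° for a triangle): 0 means a perfect equilateral triangle and values approaching 1 mean a badly distorted, sliver-like element. The sketch below computes it for a single triangle; the function names are hypothetical, and ANSYS reports its own skewness metric, so consult its documentation for the exact definition and recommended limits.

```python
import math


def triangle_angles(p0, p1, p2):
    """Return the three interior angles (in degrees) of a 2D triangle."""
    pts = [p0, p1, p2]
    angles = []
    for k in range(3):
        a, b, c = pts[k], pts[(k + 1) % 3], pts[(k + 2) % 3]
        # Angle at vertex a between the edges a->b and a->c.
        v1 = (b[0] - a[0], b[1] - a[1])
        v2 = (c[0] - a[0], c[1] - a[1])
        cos_t = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
        cos_t = max(-1.0, min(1.0, cos_t))  # guard acos against rounding error
        angles.append(math.degrees(math.acos(cos_t)))
    return angles


def equiangular_skewness(p0, p1, p2, ideal_angle=60.0):
    """Normalized equiangular skewness: 0 is ideal, values near 1 are poor."""
    angles = triangle_angles(p0, p1, p2)
    return max((max(angles) - ideal_angle) / (180.0 - ideal_angle),
               (ideal_angle - min(angles)) / ideal_angle)


print(equiangular_skewness((0, 0), (1, 0), (0.5, math.sqrt(3) / 2)))  # equilateral
print(equiangular_skewness((0, 0), (1, 0), (0, 1)))                   # right isosceles
print(equiangular_skewness((0, 0), (1, 0), (0.5, 0.05)))              # sliver
```

A right isosceles triangle scores 0.25, while the flattened sliver scores close to 1, which is the kind of element a mesh-quality check is meant to flag.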

It’s worth noting that the above steps are a general guide; depending on the specific simulation type, there may be additional steps or variations. ANSYS tutorials and documentation provide a more detailed understanding. If you want us, fivevidya, to help you, you can simply reach out through our website https://www.fivevidya.com/, or if you would like more free blogs on other topics to improve your knowledge, comment below so that we can continue to provide them.

Identifying Fuel economy model in MATLAB simulink

12/12/2022

Suppose you want to add two numbers. In MATLAB you would write a line of code to do it; in Simulink you would connect two input blocks to a Sum block and read the result from an output block. That is the basic difference between them: MATLAB is a text-based programming language, while Simulink is a graphical, block-diagram environment that works on top of MATLAB. In this blog, we will identify the fuel economy model in MATLAB Simulink. But first, let us get an idea of what MATLAB Simulink is and what a fuel economy model is.

MATLAB Simulink is a graphical programming environment for modelling, simulating and analysing dynamic systems. It allows users to design, simulate, and analyse systems using block diagrams, which are collections of blocks that represent different components of a system. The blocks can represent mathematical operations, physical systems, or even MATLAB code. Simulink is commonly used in the design and simulation of control systems, communication systems, and other engineering systems.

Understanding the fuel economy model

Fuel economy is a measure of how efficiently a vehicle uses fuel. It is expressed either as distance travelled per unit of fuel (e.g. miles per gallon) or, conversely, as fuel consumed per unit distance (e.g. litres per 100 km). A fuel economy model is a mathematical model that predicts the fuel consumption of a vehicle based on certain input variables. These input variables can include the vehicle’s speed, weight, engine size, and aerodynamic drag, as well as environmental factors such as temperature and altitude.
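To make this concrete, here is a minimal sketch of a physics-based fuel economy model written in Python rather than Simulink, assuming steady driving on a flat road: the engine must overcome rolling resistance and aerodynamic drag, and a fixed overall efficiency converts that work into fuel energy. The function names, the constant efficiency and all default parameter values are illustrative assumptions, not data for a real vehicle. Air density is the parameter through which temperature and altitude would enter.

```python
def fuel_per_100km(speed_mps, mass_kg=1500.0, c_rr=0.010, c_d=0.30,
                   frontal_area_m2=2.2, air_density=1.2,
                   efficiency=0.25, fuel_energy_j_per_l=32e6, g=9.81):
    """Steady-speed fuel consumption (L/100 km) from a simple road-load model.

    All default values are illustrative assumptions (hypothetical vehicle).
    """
    rolling_n = mass_kg * g * c_rr                                     # rolling resistance
    aero_n = 0.5 * air_density * c_d * frontal_area_m2 * speed_mps ** 2  # aerodynamic drag
    # Work per metre equals the road-load force (J/m); dividing by efficiency
    # gives fuel energy per metre, and by energy density gives litres per metre.
    litres_per_m = (rolling_n + aero_n) / (efficiency * fuel_energy_j_per_l)
    return litres_per_m * 100_000


def l_per_100km_to_us_mpg(l_per_100km):
    """Convert consumption (L/100 km) to economy (US miles per gallon)."""
    return 235.215 / l_per_100km


consumption = fuel_per_100km(25.0)  # 25 m/s = 90 km/h
print(f"{consumption:.2f} L/100 km, {l_per_100km_to_us_mpg(consumption):.1f} mpg (US)")
```

At 25 m/s these assumed values give roughly 4.9 L/100 km. Because drag grows with the square of speed, driving faster raises consumption per kilometre, which is the kind of "what if" question such a model is used to answer.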

Fuel economy models can be used to estimate the fuel consumption of a vehicle under different operating conditions, and to evaluate the potential fuel savings of different design or operational changes. They can also be used to compare the fuel efficiency of different vehicles or technologies.

Fuel economy models can be developed using various methods, including experimental testing, simulation and data-driven approaches. They are commonly used in the automotive industry, transportation research, and environmental impact studies.

Identifying the fuel economy model in the MATLAB Simulink

In MATLAB Simulink, the “Fuel Economy” library provides a set of blocks that can be used to model the fuel consumption of a vehicle. This library includes blocks for modelling the engine, transmission, and other powertrain components, as well as blocks for modelling the vehicle’s dynamics, such as its speed and acceleration.

The library also includes a block called the “Fuel Consumption Block” which can be used to calculate the fuel consumption of the vehicle based on the input from other blocks in the model. This block can be configured to use different equations or data sets to model the fuel consumption, and it can output the fuel consumption as a function of time, distance, or other variables.
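A Simulink model run with a fixed-step solver essentially evaluates such equations at each time step and integrates the result. The hypothetical function below imitates that in plain Python: it steps through a sampled speed trace, adds an inertia term for acceleration, and accumulates fuel so that consumption is available as a function of both time and distance. It is not the Simulink block itself; the parameter values are illustrative assumptions, and it assumes no fuel is used while braking, coasting or idling.

```python
def simulate_fuel(speeds_mps, dt_s=1.0, mass_kg=1500.0, c_rr=0.010, c_d=0.30,
                  frontal_area_m2=2.2, air_density=1.2, efficiency=0.25,
                  fuel_energy_j_per_l=32e6, g=9.81):
    """Integrate fuel use over a sampled speed trace with a fixed time step.

    Returns three equal-length lists: time (s), distance (m), cumulative fuel (L).
    Assumption: zero fuel when the required power is negative or zero.
    """
    time, distance, fuel = [0.0], [0.0], [0.0]
    for k in range(1, len(speeds_mps)):
        v = speeds_mps[k]
        accel = (speeds_mps[k] - speeds_mps[k - 1]) / dt_s
        force_n = (mass_kg * g * c_rr                                  # rolling resistance
                   + 0.5 * air_density * c_d * frontal_area_m2 * v ** 2  # aerodynamic drag
                   + mass_kg * accel)                                  # inertia
        power_w = max(force_n * v, 0.0)
        fuel_rate_l_per_s = power_w / (efficiency * fuel_energy_j_per_l)
        time.append(time[-1] + dt_s)
        distance.append(distance[-1] + v * dt_s)
        fuel.append(fuel[-1] + fuel_rate_l_per_s * dt_s)
    return time, distance, fuel


# Usage: accelerate from 0 to 25 m/s over 10 s, then cruise for 20 s.
trace = [2.5 * k for k in range(11)] + [25.0] * 20
t, d, f = simulate_fuel(trace)
print(f"{t[-1]:.0f} s, {d[-1]:.0f} m, {f[-1] * 1000:.1f} mL, "
      f"{f[-1] / d[-1] * 100_000:.2f} L/100 km")
```

Swapping in a different speed trace, such as a standard drive cycle, or a different set of vehicle parameters is how such a model can compare operating conditions or vehicles.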

Simulink also provides a set of “Driveline” libraries which can be used to model the powertrain of the vehicle including the engine, transmission and other components. The library includes blocks for modelling the mechanical and thermal components of the engine, as well as the transmission and driveline. These blocks can be connected to other blocks in the model to simulate the behaviour of the entire powertrain.

The above-mentioned blocks can be combined to create a fuel economy model, which can be used to predict the fuel consumption of a vehicle under different operating conditions and to compare the fuel efficiency of different vehicles or technologies. We, at fivevidya, can also help you identify the fuel economy model in MATLAB Simulink; we have helped more than 1,000 students. Just visit https://www.fivevidya.com/ to find out how.

Evaluating the limitations and importance of quasi-experiments to formulate better research questionnaires

21/11/2022

A research questionnaire is a set of questions put to research respondents so that you can gather and analyze their responses to learn about the research topic. But if we do not know how to design research questionnaires, how can we formulate better research questions? A particular type of study called a “quasi-experiment” can help us formulate better questions. But is it effective? Does it have any limitations? In this blog, we will identify the limitations and importance of quasi-experiments in formulating better research questionnaires.

Describing quasi-experiment

A quasi-experiment is a type of study that resembles an experimental study but does not meet all of the requirements for a true experiment. In a true experiment, the researcher randomly assigns participants to different groups and manipulates an independent variable to observe its effect on a dependent variable. In a quasi-experiment, the researcher does not have control over the assignment of participants to groups, but still observes the effect of an independent variable on a dependent variable. Quasi-experiments are often used in naturalistic settings where it is not possible or ethical to manipulate the independent variable.

Evaluating the limitations and importance of quasi-experiments in formulating a better research questionnaire

Quasi-experiments are important in formulating research questions because they can be used to investigate causality in situations where true experiments are not feasible. For example, a researcher might be interested in the effect of a new educational program on student achievement. Because it may not be ethical or practical to randomly assign students to receive or not receive the program, the researcher could instead use existing groups, comparing students who are already participating in the program with a similar group of students who are not. This allows the researcher to draw tentative causal inferences about the program’s effectiveness despite the lack of random assignment. In addition, quasi-experiments can help build the case for more rigorous, true experiments that can be carried out later.
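One common way to make such a comparison of existing groups more credible, when scores from before the program are available, is a difference-in-differences comparison. It is a named technique added here for illustration, not a method prescribed above. The scores are hypothetical, and the approach assumes that without the program both groups would have improved by the same amount (the "parallel trends" assumption).

```python
from statistics import mean

# Hypothetical test scores (not real data), before and after the program.
program_pre, program_post = [58, 60, 62], [70, 72, 74]
comparison_pre, comparison_post = [53, 55, 57], [60, 62, 64]

# Naive post-test comparison: ignores that the groups started at different levels.
naive_difference = mean(program_post) - mean(comparison_post)

# Difference-in-differences: compare each group's gain instead of its final level.
program_gain = mean(program_post) - mean(program_pre)
comparison_gain = mean(comparison_post) - mean(comparison_pre)
did_estimate = program_gain - comparison_gain

print("naive difference:", naive_difference)
print("difference-in-differences:", did_estimate)
```

Here the program group scores 10 points higher after the program, but it also started 5 points higher, so the difference-in-differences estimate of the program's effect is only 5 points.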

There are several limitations to using quasi-experiments to formulate research questions. Some of the main limitations include:

1. Selection bias: As participants are not randomly assigned to the experimental and control groups, there may be systematic differences between the groups that could affect the results.

2. Lack of control: Quasi-experiments typically have less control over the conditions of the study than true experiments, which can make it more difficult to isolate the specific factors that are affecting the outcome.

3. Difficult to establish causality: Without random assignment, it can be difficult to establish causality and rule out alternative explanations for the results.

4. Generalizability: Quasi-experiments are often conducted in specific settings or with specific populations, which limits their generalizability to other settings or populations.

5. Confounding variables: Quasi-experiments are more likely to have uncontrolled confounding variables that might affect the outcome.
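Selection bias and confounding can be illustrated with a small simulation. In the hypothetical scenario below, the program has no effect at all, yet because more motivated students choose to enrol and motivation also raises scores, a naive comparison of the two groups shows a sizeable "effect". All numbers are invented for illustration.

```python
import random
from statistics import mean

random.seed(42)  # fixed seed so the run is reproducible

program, comparison = [], []
for _ in range(1000):
    motivation = random.random()                       # unobserved trait, 0 to 1
    score = 50 + 20 * motivation + random.gauss(0, 2)  # the program adds nothing
    if motivation > 0.5:                               # motivated students self-select
        program.append(score)
    else:
        comparison.append(score)

naive_effect = mean(program) - mean(comparison)
print(f"apparent program effect: {naive_effect:.1f} points (true effect: 0)")
```

With these settings the apparent effect is around 10 points, produced entirely by self-selection through an unmeasured confounder, which is exactly why quasi-experimental results need cautious interpretation.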

Quasi-experiments can be a useful research method in certain situations, but it’s important to be aware of their limitations and the potential for bias when interpreting the results.

Finally, a quasi-experiment differs from a true experiment in important ways, so you need a thorough understanding of its strengths and limitations before using it. If you want us, fivevidya, to help you, you can simply reach out to us through our website https://www.fivevidya.com/. If you want to learn more about quasi-experiments, comment below so that we can provide you with free in-depth blogs on them.

Understanding the use of one way ANOVA to conduct quantitative data analysis

10/10/2022

Suppose you have started a business and want to approach investors for funding. They will look at your year-by-year or month-by-month growth rate, your profit margin, your revenues, and other financials. These are all numbers, i.e. quantitative data, which are used to make business decisions and even real-life decisions. One-way ANOVA (analysis of variance) is a statistical test that can help us conduct quantitative data analysis. In this blog, we will learn how to use one-way ANOVA for quantitative data analysis. But first, let us briefly define quantitative data analysis so that you can follow the rest of the blog easily, and then we will move on to the definition and use of one-way ANOVA.

Quantitative data analysis is the process of using statistical and mathematical techniques to analyze numerical data in order to draw conclusions and make inferences about the data. This type of analysis is used to identify patterns, relationships, and trends in the data, and can be used to test hypotheses and make predictions about a population or a phenomenon.

There are various methods that can be used in quantitative data analysis, including:

1. Descriptive statistics: These methods are used to summarise and describe the data, such as calculating measures of central tendency (e.g. mean, median) and dispersion (e.g. standard deviation).

2. Inferential statistics: These methods are used to make inferences about a population based on a sample of data, such as hypothesis testing and estimation.

3. Multivariate analysis: These methods are used to analyze relationships between multiple variables, such as factor analysis, cluster analysis, and regression analysis.
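As a small illustration of the first category, Python's built-in statistics module can compute measures of central tendency and dispersion. The revenue figures below are hypothetical.

```python
from statistics import mean, median, stdev

# Hypothetical monthly revenue figures (in thousands), purely illustrative.
revenue = [12, 15, 11, 18, 15, 20]

print("mean:", round(mean(revenue), 2))      # central tendency
print("median:", median(revenue))            # central tendency, robust to outliers
print("std dev:", round(stdev(revenue), 2))  # dispersion (sample standard deviation)
```

Inferential methods such as the one-way ANOVA discussed below build on exactly these summaries: group means and the spread of values around them.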

Quantitative data is usually collected through methods such as surveys, experiments, and structured observation. The data collected is numerical and can be easily processed by computer software for statistical analysis.

What is one-way ANOVA?

One-way ANOVA (Analysis of Variance) is a statistical method used to determine if there is a significant difference in the mean of a dependent variable (also known as the outcome variable) among two or more independent groups (also known as categories or levels of a factor). It is a way to test whether the means of two or more groups are equal, or if there is a significant difference between them.

One-way ANOVA is used when you have one independent variable (i.e. factor) with two or more levels and one dependent variable. It is used to test the null hypothesis that the means of the groups are equal, against the alternative hypothesis that at least one mean is different from the others.

Ways in which one way ANOVA can be used to conduct quantitative data analysis

One-way ANOVA is a statistical method used to conduct quantitative data analysis in order to determine if there is a significant difference in the mean of a dependent variable among two or more independent groups. It is commonly used when you have one independent variable (i.e. factor) with two or more levels and one dependent variable.

The basic steps in conducting a one-way ANOVA include:

a. Defining the research question and stating the null and alternative hypotheses.

b. Collecting and cleaning the data.

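c. Checking the assumptions. One-way ANOVA assumes that the observations are independent, that the dependent variable is approximately normally distributed within each group, and that the group variances are roughly equal, so these should be checked before running the analysis.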

d. Computing the mean and standard deviation for each level of the independent variable.

e. Performing the ANOVA test using statistical software or a calculator.

f. Interpreting the results.
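Steps d and e can be carried out by hand in a few lines of Python. This minimal sketch uses made-up data for three groups: it computes the between-group and within-group sums of squares and forms the F-ratio from them. The function name and data are illustrative, and the code does not check the assumptions from step c.

```python
from statistics import mean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    # Between-group sum of squares: how far each group mean lies from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


# Hypothetical scores for three groups (illustrative only).
group_a, group_b, group_c = [4, 5, 6], [6, 7, 8], [9, 10, 11]
f_ratio, df_between, df_within = one_way_anova(group_a, group_b, group_c)
print(f"F({df_between}, {df_within}) = {f_ratio:.2f}")
```

For these numbers the sums of squares are 38 (between) and 6 (within), giving F = 19 with 2 and 6 degrees of freedom. If SciPy is installed, `scipy.stats.f_oneway(group_a, group_b, group_c)` returns the same F statistic together with its p-value.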

The output of a one-way ANOVA includes the F-ratio, its degrees of freedom (between groups and within groups), and the p-value. The F-ratio compares the variation between the group means with the variation within the groups. The degrees of freedom identify which F-distribution the ratio should be compared against, and the p-value is the probability of obtaining an F-ratio at least this large if the null hypothesis were true.

If the p-value is less than the chosen level of significance (usually 0.05), or equivalently if the F-ratio is greater than the critical value from the F-distribution table, the null hypothesis is rejected, indicating that the mean of the dependent variable differs significantly between at least two of the groups. A significant result does not say which groups differ; post hoc tests such as Tukey's HSD are used for that. If the p-value is greater than the level of significance, the null hypothesis is not rejected, meaning that the data do not provide evidence of a difference in means among the groups.
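The two decision rules are equivalent. For the special case of two numerator degrees of freedom (three groups), the F-distribution has a simple closed form, P(F > f) = (1 + 2f/d)^(-d/2), so both the p-value and the critical value can be computed without tables. The sketch below uses that closed form; it is valid only when df_between = 2, and the function name is hypothetical.

```python
def f_test_df1_equals_2(f_ratio, df_within, alpha=0.05):
    """Exact p-value and critical value for an F test with 2 numerator df.

    Uses the closed form of the F(2, d) distribution:
    P(F > f) = (1 + 2f/d) ** (-d/2). Only valid when df_between == 2.
    """
    d = df_within
    p_value = (1 + 2 * f_ratio / d) ** (-d / 2)
    # Solve (1 + 2c/d) ** (-d/2) = alpha for the critical value c.
    critical_value = (d / 2) * (alpha ** (-2 / d) - 1)
    return p_value, critical_value


f_ratio = 19.0
p, crit = f_test_df1_equals_2(f_ratio, df_within=6)
print(f"p = {p:.4f}, F critical = {crit:.2f}")
print("reject H0" if p < 0.05 else "fail to reject H0")
# The two decision rules always agree: p < alpha exactly when F > critical value.
print(p < 0.05, f_ratio > crit)
```

With F = 19 and 6 within-group degrees of freedom, this gives p ≈ 0.0025 and a critical value of about 5.14 (the tabulated F(2, 6) value at α = 0.05), so both rules reject the null hypothesis.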

In summary, one-way ANOVA is a powerful tool for quantitative data analysis that allows researchers to test hypotheses about the difference in means among two or more groups, and it is useful in many fields such as psychology, biology, economics, marketing, and many others.

Dealing with the risk of over-interpreting or under-interpreting qualitative data- the grounded theory approach

08/09/2022

What really makes us happy? It is usually not our salary or the numbers on our business’ balance sheet, but our environment, our relationships, and other personal or professional factors. Identifying and analyzing information like this is, basically, working with qualitative data. But how can we be sure that such data are correct and correctly understood? Even when we interview someone directly, we cannot be certain that the answers they give are accurate, or that we have read them as they were meant. That is the risk of over-interpreting or under-interpreting qualitative data. In this blog, we will look at how the grounded theory approach helps to deal with this risk. First, let us define qualitative data so that the topic is easier to follow.

Qualitative data is non-numerical information that can be collected through methods such as interviews, focus groups, observations, and written or visual materials. It is used to understand and describe the characteristics of a particular group or phenomenon and can provide insight into people’s attitudes, beliefs, behaviors, and motivations. Examples of qualitative data include transcripts of interviews, field notes from observations, and written responses to open-ended survey questions.

Understanding the grounded theory approach

Grounded theory is a research method that is used to generate a theory that explains a phenomenon by analyzing data collected from a variety of sources. The theory that is developed is “grounded” in the data, meaning that it is directly derived from the data and is not imposed on the data. The grounded theory approach is commonly used in the social sciences and is particularly popular in the field of sociology.

The grounded theory approach typically involves several phases, including:

1. Data collection: Data is collected from a variety of sources, such as interviews, observations, and written materials.

2. Data analysis: The data is analyzed and coded in order to identify patterns and themes.

3. Theory development: A theory is developed that explains the phenomenon being studied and is supported by the data.

The grounded theory approach is often used when there is little existing theory on a topic and when the researcher wants to understand a phenomenon from the perspective of the people experiencing it.

Identifying and resolving the risk of over-interpreting or under-interpreting qualitative data by using the grounded theory approach

Grounded theory is a widely used approach for analyzing qualitative data that can help researchers avoid the risk of over-interpreting or under-interpreting the data.

To identify and resolve the risk of over-interpreting, researchers using grounded theory should follow the guidelines of the constant comparative method, which involves comparing data within and across cases to identify patterns and themes. This approach helps researchers to generate a theory that is grounded in the data, rather than imposing preconceived ideas or theories on the data.

To avoid under-interpreting, researchers should use open coding to identify patterns and themes in the data, and then use axial and selective coding to connect these patterns and themes to broader theoretical concepts. Additionally, researchers should use theoretical sampling to guide data collection and analysis, to ensure that they are collecting data that is relevant to the emerging theory.

Another key aspect of grounded theory is memo-writing (often called memoing), in which researchers record their thoughts and ideas while they are analyzing the data; this supports reflective practice and can help researchers identify their own biases.

The grounded theory approach plays an essential role in reducing the risk of over-interpreting or under-interpreting qualitative data. We, at fivevidya, can help you deal with this risk at an affordable price, and we can also teach you how to deal with it in practice in future if you require it. Please visit our website https://www.fivevidya.com/.

Collecting qualitative data using interviews for PhD research – A complete guide

11/08/2022

Having identified the disciplinary orientation and design of the investigation, a researcher gathers information that will address the fundamental research question. Interviews are a very common method of data collection in case study research. Interviews with individuals or groups allow the researcher to obtain rich, personalized information (Mason, 2002). To conduct successful interviews, the researcher should follow several guidelines.

First, the researcher should identify key participants in the situation whose knowledge and opinions may provide important insights regarding the research questions. Participants may be interviewed individually or in groups. Individual interviews yield significant amounts of information from an individual’s perspective, but may be quite time-consuming. Group interviews capitalize on the sharing and creation of new ideas that sometimes would not occur if the participants were interviewed individually; however, group interviews run the risk of not fully capturing all participants’ perspectives. For example, a researcher investigating student attrition in her school would need to weigh the advantages and disadvantages of interviewing select students, teachers, administrators, and even students’ parents individually or collectively.

Second, the researcher should develop an interview guide (sometimes called an interview protocol). This guide identifies appropriate open-ended questions that the researcher will ask each interviewee. These questions are designed to give the researcher insight into the study’s fundamental research question; hence, the number of questions for a particular interview varies widely. For example, a nurse interested in his hospital’s potentially discriminatory employment practices may ask: What qualifications do you seek in your employees? How do you ensure that you hire the most qualified candidates for positions in your hospital? How does your hospital serve ethnic minorities?

Third, the researcher should consider the setting in which he or she conducts the interview. Although interviews in the natural setting may enhance realism, the researcher may seek a private, neutral, and distraction-free location to increase the interviewee’s comfort and the likelihood of obtaining high-quality information. For example, a technology specialist exploring her organization’s computer software adoption procedures may elect to interview her company’s administrators in a separate office rather than in the presence of coworkers.

Fourth, the researcher should develop a means of recording the interview data. Handwritten notes sometimes suffice, but the lack of detail associated with this approach inevitably results in a loss of valuable information. A more complete way to record interview data is to audiotape the interview; for audiotaping, however, the researcher must obtain the participant’s permission. After the interview, the researcher transcribes the recording for closer scrutiny and for comparison with data derived from other sources.

Fifth, the researcher must adhere to legal and ethical standards for all research involving people. Interviewees should not be deceived and must be protected from any form of mental, physical, or emotional injury. Interviewees must give informed consent to participate in the research. Unless otherwise required by law, or unless interviewees consent to public identification, information obtained from an interview should be kept anonymous and confidential. Interviewees have the right to end the interview and should be debriefed by the case study researcher after the research has ended.

Interviews may be structured, semi-structured, or unstructured. Semi-structured interviews are particularly well suited to case study research. Using this approach, researchers ask predetermined but flexibly worded questions, the answers to which provide tentative answers to the researcher’s questions. In addition to posing predetermined questions, researchers using semi-structured interviews ask follow-up questions designed to probe more deeply into issues of interest to interviewees. In this manner, semi-structured interviews invite interviewees to express themselves openly and freely and to define the world from their own perspectives, not solely from the perspective of the researcher.

Identifying and gaining access to interviewees is a critical step. The selection of interviewees directly influences the quality of the information obtained. Although availability is important, it should not be the only criterion for selecting interviewees. The most important consideration is to identify those persons in the research setting who may have the best information with which to address the study’s research questions. These potential interviewees must be willing to participate in an interview, and the researcher must have the ability and resources to gain access to them.

When conducting an interview, a researcher should accomplish several tasks. First, she should obtain the interviewee’s consent to proceed with the interview and clarify issues of anonymity and confidentiality. Second, she should review with the interviewee when he or she may expect to view the results of the research of which this interview is a part. While asking questions, the researcher should ask only open-ended questions, avoiding yes/no questions, leading questions, and multiple-part questions. Finally, the researcher should remember that time spent talking is time taken away from the interviewee. In other words, the researcher should limit her comments as much as possible to allow the interviewee more time to offer his perspectives.

Interviews are frequently used in case study research. The researcher is guided by an interview guide and conducts the interview in a setting chosen to maximize the responsiveness of those being interviewed. Responses are recorded in writing or electronically for later review and analysis. When conducting interviews, researchers are careful not to violate legal or ethical protections. While interviews are widely used, other methods are also used to gather data in case study research.